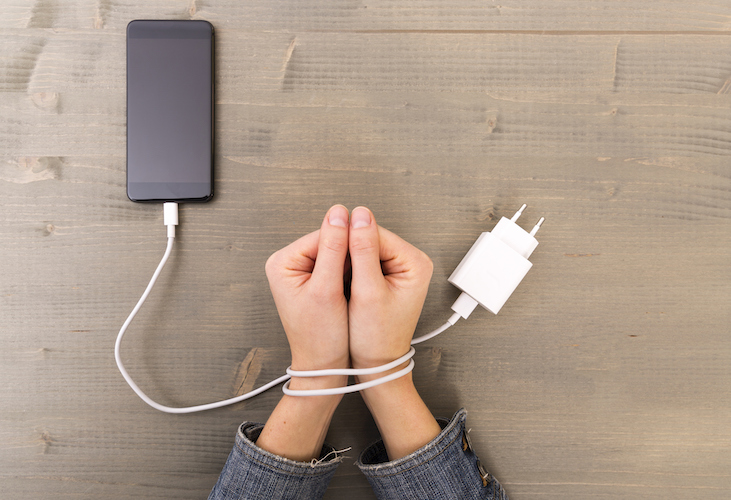

Scholar argues that the government should regulate app developers to prevent technology addiction.

On average, Americans touch their phones more than 2,600 times per day.

App developers drive this obsession by creating addictive phone applications that generate revenue from advertisers. Former Facebook executives have even admitted that they created design features, such as the “like” button and a news feed with an “endless scroll,” to exploit weaknesses in human psychology.

In a recent paper, Kyle Langvardt, an associate professor at the University of Detroit Mercy School of Law, argues that the federal government should regulate predatory design practices that tend to foster behavioral addiction.

Langvardt explains that habit-forming app designs can cause serious financial problems for vulnerable users who become addicted to the product. He also describes how the algorithms that drive the addictive components of the apps promote group identification and “angry tribal politics.” These algorithms eliminate user discretion in their content selection by pre-defining user interests.

For example, although YouTube users appear to choose videos from an unlimited universe of content, they are really selecting from a pre-determined list based on their search history.

Because app companies’ algorithms send users polarizing content that aligns with their predicted ideologies, Langvardt cautions that users have become more radical and closely tied to their beliefs. Regulating habit-forming technology would thus enhance the quality of online speech by promoting more honest research and access to a wider range of sources.

To prevent overuse, Langvardt recommends that the government adopt a labeling strategy requiring tech products to display messages about the risks associated with tech addiction and overuse. For example, a Snapchat warning could inform users that the streak feature, a goal-setting tactic used to encourage daily use, exploits people’s sense of social obligation.

Alternatively, Langvardt proposes that apps be required to report the amount of time users have logged, either within one session or within the span of a day or week. Apps could also display data about a user’s likes, logins, swipes, or taps.

A more stringent rule would require apps to detect patterns of problem usage and advise users to disengage. Langvardt recognizes that some apps allow users to impose their own limits by setting timers or temporarily disabling notifications. But he explains that these measures are hidden within settings menus and are turned off by default. He urges regulators to require app developers to put these functions in the foreground of the user interface and turn them on by default.

Langvardt recommends that regulators consider prohibiting features that stimulate compulsive use, such as simple aesthetic choices like bright red notifications or animations. Alternatively, regulators could require that developers implement counter-addictive design features, such as mandatory breaks after long periods of use, says Langvardt.

Washington State’s gambling laws are an encouraging example, Langvardt says. These laws have been interpreted to cover some casino-themed mobile games. In addition, some Asian countries have implemented anti-addiction requirements to deter extended play by prohibiting minors from playing after midnight. The Chinese government also requires developers to make games less enticing after long periods of consecutive use by reducing the value of in-game rewards.

Langvardt presumes that the Federal Trade Commission is best suited to protect consumers against habit-forming technology, either by using its power to police “unfair and deceptive practices” or by adapting its privacy approach to the technology.

Instead of placing restrictions on the apps themselves, Langvardt says that legislatures could reduce the incentive to make addictive technologies in the first place. Restricting targeted advertising or setting caps on the revenues earned from advertising would discourage the production of time-consuming games or social media apps. Moreover, limiting user expenditures on habit-forming technologies to $100 each month, for example, would deter predatory design practices.

Langvardt admits that the government should not make value judgments to prohibit certain activities. His proposed regulation would simply make product designs less manipulative and would enhance users’ freedom of choice. He points out that governments regulate other nuisances that impact people’s quality of life when they enact laws that regulate spam mail or impose local property ordinances on shrubbery and house paint. He emphasizes that preserving general quality of life is a valid regulatory concern.

Langvardt recognizes that many of his proposed regulations would likely face First Amendment scrutiny, since courts have understood social media, video games, and computer code to be forms of protected expression. But current law does not address the technical components involved in habit-forming technology, Langvardt says.

Although developers’ efforts to create addictive technology have not received the attention given to high-profile matters like the opioid epidemic, Langvardt cautions that these technologies may become more threatening in the coming years. By taking habit-forming technologies seriously now, the government can confront these issues pragmatically, making necessary adjustments as further complications arise.