Organizations should tailor contract requirements for procured AI systems based on levels of risk.

Responsibly procuring and deploying artificial intelligence (AI) systems is a complex endeavor.

Through our work at the Responsible AI Institute, we have helped the Joint Artificial Intelligence Center (JAIC) at the U.S. Department of Defense develop foundational procurement protocols that embody the agency’s AI Ethics Principles. We have also supported companies in the financial services and health care industries as they develop their responsible AI procurement processes.

Although each context poses different and important issues, our experience suggests that tailoring contract requirements for procured AI systems to the level of risk each system creates is an effective way to address three major challenges that organizations face in responsibly procuring and deploying AI systems: developing organizational responsible AI capacity, navigating legal uncertainty and addressing general information technology procurement issues.

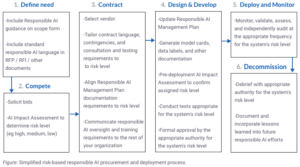

To use this risk-based approach, an organization should assign each AI system a risk level, such as high, medium or low, early in the procurement process, based on the results of its AI impact assessment.

If an organization is procuring an already developed system, AI impact assessment results may be provided as part of the bid. If an organization is creating an AI system with vendor help, it should conduct an AI impact assessment once the details of the system are known. An AI system’s risk level should reflect risks to the people impacted by the system as well as risks to the organization.

Although the contents of an organization’s AI impact assessment will vary by organization and context, they should always include considerations related to accountability; robustness, safety, and security; bias and fairness; system operations; explainability and interpretability; and consumer protection. These foundational categories form the basis of our Responsible AI Implementation Framework.
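As a minimal sketch of how assessment results might be rolled up into a single risk level, the Python below rates each of the six categories for risk to impacted people and risk to the organization, then takes the highest rating as the system's level. The "highest rating wins" rule, the three-point scale and the names `RiskLevel` and `assign_risk_level` are illustrative assumptions, not part of our framework; an organization may reasonably weight categories differently.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Hypothetical three-point scale; ordering lets levels be compared."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# The six assessment categories named in the text above.
CATEGORIES = (
    "accountability",
    "robustness, safety, and security",
    "bias and fairness",
    "system operations",
    "explainability and interpretability",
    "consumer protection",
)


def assign_risk_level(ratings):
    """Return the overall risk level for one AI system.

    `ratings` maps each category to a pair
    (risk_to_people, risk_to_organization) of RiskLevel values.
    Assumption: the overall level is the highest single rating.
    """
    missing = [c for c in CATEGORIES if c not in ratings]
    if missing:
        raise ValueError(f"assessment is missing categories: {missing}")
    return max(max(people, org) for people, org in ratings.values())


# Example: a hiring tool rated low everywhere except bias and fairness.
example = {c: (RiskLevel.LOW, RiskLevel.LOW) for c in CATEGORIES}
example["bias and fairness"] = (RiskLevel.HIGH, RiskLevel.MEDIUM)
overall = assign_risk_level(example)
```

Taking the maximum is a deliberately conservative choice: one serious concern about harm to people is enough to move the whole system into the higher tier.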

Once assigned, an AI system’s risk level should guide the remainder of the procurement and deployment process (see figure). For example, while all AI systems should undergo testing before and during deployment, the extent of the testing, the approval authority that greenlights deployment, the frequency of testing during deployment and documentation requirements—such as responsible AI management plan details and frequency of updates—should all be determined by the risk level of the AI system.
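One way to make this concrete is a simple lookup from risk level to oversight requirements that can be referenced in contract language. The Python sketch below is illustrative only: the approval authorities, testing intervals and update frequencies are hypothetical placeholders, and each organization would set its own values.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class OversightRequirements:
    pre_deployment_testing: str      # extent of testing before deployment
    approval_authority: str          # who greenlights deployment
    testing_interval_days: int       # how often to retest during deployment
    plan_update_interval_days: int   # how often to update the management plan


# All values below are hypothetical examples, not recommendations.
REQUIREMENTS = {
    RiskLevel.LOW: OversightRequirements(
        "standard functional tests", "project lead", 365, 365),
    RiskLevel.MEDIUM: OversightRequirements(
        "functional and fairness tests", "department head", 180, 180),
    RiskLevel.HIGH: OversightRequirements(
        "functional, fairness and independent third-party tests",
        "executive review board", 90, 90),
}


def requirements_for(level: RiskLevel) -> OversightRequirements:
    """Look up the oversight requirements tied to a risk level."""
    return REQUIREMENTS[level]


high = requirements_for(RiskLevel.HIGH)
low = requirements_for(RiskLevel.LOW)
```

Keeping the mapping in one place means that vendors and internal teams see the same requirements for a given risk level, and that changing a requirement is a single edit.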

Responsible AI programs at most organizations are in an early stage of maturity. Since the functioning of AI systems can be unpredictable and difficult to understand, responsibly deploying AI requires an organization to develop capacity in the form of new contract requirements. These requirements can take a variety of shapes and include assessments such as an AI impact assessment, documentation requirements like the responsible AI management plan, governance processes, policy frameworks and training programs.

As an organization develops responsible AI capacity, adopting a risk-based approach to AI procurement can promote a thoughtful and measured understanding of AI within different parts of an organization, overstating neither the benefits nor the risks of AI systems.

Since AI systems are generally not subject to blanket regulation and industry-specific AI laws are often short on details, legal requirements for AI systems can be difficult to determine.

For example, New York City legislation requires that any automated hiring system used on or after January 1, 2023, undergo a bias audit consisting of an “impartial evaluation by an independent auditor,” including testing to assess potential disparate impact on certain groups. The legislation does not, however, specify what kinds of discrimination to test for, what criteria to apply or how often to test.
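To illustrate the kind of choice the legislation leaves open, the Python sketch below computes one widely used disparate impact measure: each group's selection rate divided by the highest group's selection rate. The 0.8 threshold echoes the "four-fifths" rule of thumb from the federal Uniform Guidelines on Employee Selection Procedures; it is an assumption made for illustration, not something the legislation as described here prescribes. The group names and counts are invented.

```python
def impact_ratios(outcomes):
    """Compute each group's selection rate relative to the highest rate.

    `outcomes` maps a group name to (number_selected, number_of_applicants).
    """
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections")
    return {group: rate / highest for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold.

    The default threshold is an illustrative assumption.
    """
    ratios = impact_ratios(outcomes)
    return sorted(g for g, r in ratios.items() if r < threshold)


# Invented example data: (selected, applicants) per group.
sample = {"group_a": (50, 100), "group_b": (30, 100)}
ratios = impact_ratios(sample)
flagged = flag_groups(sample)
```

A different auditor could just as defensibly choose another metric, threshold or set of groups, which is exactly the uncertainty that makes early legal review worthwhile.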

By incorporating regulatory risk considerations into the initial AI impact assessment, organizations can consider the legal and compliance implications of deploying an AI system early in the procurement process, reducing the need for expensive and time-consuming interventions later in the system’s life cycle.

Beyond revealing regulatory risks to an organization, carefully reviewing proposed and enacted AI-specific laws and regulations gives insight into the potential harms to people that regulators are seeking to address. The risks of these potential harms, too, should be thoughtfully incorporated into the AI impact assessment.

For instance, an organization’s AI impact assessment for an AI system related to hiring should gauge its compliance with Equal Employment Opportunity Commission guidance on how such systems may violate the Americans with Disabilities Act, Illinois’ notification and consent requirement for AI video interviews, Maryland’s notification and consent requirement for the use of facial recognition in video interviews and New York City’s aforementioned bias audit requirement for automated hiring systems. More generally, it should address the potential fairness, notification, transparency, recourse and effectiveness issues that are driving these regulations.

Adopting a risk-based approach to procurement also allows an organization to incorporate contracting language that aligns with emerging laws, best practices and certification standards. For example, the European Union’s proposed Artificial Intelligence Act, the National Institute of Standards and Technology’s draft AI Risk Management Framework, Canada’s proposed Artificial Intelligence and Data Act and our Responsible AI Institute Certification Program—which is currently under review by national accreditation bodies—all reflect increasingly sophisticated understandings of responsible AI implementation.

Efforts to responsibly procure and deploy AI systems often bring into sharper focus well-known IT procurement issues, including building the organizational expertise to manage external teams, preventing vendor lock-in and providing an even playing field for suppliers of different sizes.

For example, while startups that provide AI solutions are sometimes more current in their understanding of responsible AI considerations and can be faster to adapt to new types of contract requirements, established technology companies can often use existing inroads with organizations to outmaneuver new entrants.

Adopting a risk-based approach to procurement and clearly communicating it to vendors can help address such issues in three ways. It gives the procuring organization advance notice of the specific oversight capabilities it will need in future stages of the system life cycle. It prevents vendors from raising intellectual property arguments against required testing, monitoring and auditing of their AI systems. And it rewards vendors of all sizes that are more advanced and responsive in their responsible AI efforts.

This essay is part of a nine-part series entitled Artificial Intelligence and Procurement.